When I say thinking, I don’t mean the kind of thinking that fills most of our lives. It’s not that cognitively difficult to pick what to eat for lunch, what to wear to work, what TV show to watch, or how to load the dishwasher. Most of your day is entirely routine and simple. It still tires you out of course, but it doesn’t demand that you be rationally or logically rigorous in your thinking. And most of the time it doesn’t matter how you decide on those things. Whether you decide on fast food for lunch or pack a healthy one, the difference is marginal. No one dies, no one goes to prison, no one has their rights infringed, and if it’s just a one time thing you don’t even really damage your health.

Humans like to imagine that they are intelligent, rational creatures and in many ways we are. Certainly compared to other animals we know of we are at the top of the intelligence pyramid. But that doesn’t make us rational or intelligent. It just means we are compared to other animals. But really, just think of the smartest non-human species out there. How smart are they? It’s a pretty low bar we’re setting for ourselves. That we beat them on metrics of intelligence (which we came up with) doesn’t mean we’re actually smart, just smarter than they are. Even the smartest ape can’t do long division or learn a written language. Those are things the average human child has nailed down by the time they enter middle school.

Try to eliminate your ego as you read this and open your mind to the possibility that you’re not as good a thinker as you believe you are. Your impulse is to fight the idea. “I did well in school!” or “I have a challenging job!” I’m sure you’re right but like most people you are still going to carry countless cognitive biases that distort your ability to think rationally in many situations. These biases affect everyone, even some of the most intelligent among us.

The Puzzles

Try not to look up solutions before attempting these puzzles.

1. Are You Smarter Than 61,139 Other New York Times Readers? — Solution

This is a puzzle that existed before the New York Times, but they provide a graph with the results and it has a large pool of people who have already played so it is a good tool to examine your thought process. It’s sometimes called the “guess the 2/3 of the average” game. Not very clever but at least it says exactly what it’s about.

The game is this: Guess a number from 0 to 100. However, the number you choose should be one which you believe represents 2/3 of the average of all the numbers chosen by other players. So if you think the average of the other players’ guesses is 75, you should pick 50, which is 2/3 of 75. If you think the average of the other players’ guesses is 50, you should pick 33, which is 2/3 of 50 (or close to it, 33.3333…).

Remember, the key here is you have to predict what everyone else is going to do. What’s your guess?

— —

— —

The answer is around 18–22. Now the problem with this is that there’s no “right” answer that is always right. It changes based on the people who play. But this is a game that has been run several times by various groups and the result is usually right in there between 18 and 22. What did you guess and why?

The process goes in steps.

- Numbers are picked at random initially. Logic suggests the average hovers around 50.

- Take 2/3 of 50 as per the goal of the puzzle. The result is 33.33…

- Realize that if other people take 2/3 of 50, and make that their answer, you should take 2/3 of 2/3 of 50 which is 22.22…

- Realize that if people did step 3, you should take 2/3 of 22.22 which is 14.81.

- Repeat step 4 until you reach 0.
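The steps above are just repeated multiplication by 2/3. Here is a minimal sketch of that chain of reasoning. The function name and the choice of six steps are my own, purely for illustration.

```python
def level_k_guesses(start=50.0, factor=2 / 3, levels=6):
    """Return the guess made at each depth of reasoning, starting from the naive average."""
    guesses = [start]
    for _ in range(levels):
        # Each deeper thinker assumes everyone else stopped one step earlier.
        guesses.append(guesses[-1] * factor)
    return guesses


for depth, guess in enumerate(level_k_guesses()):
    print(f"step {depth}: {guess:.2f}")
```

The numbers shrink toward 0 but never jump there in one move, which is why each extra step of thinking lands on a different guess.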

Take a look at the chart from the New York Times showing their results.

Several things to notice here. First, there’s a huge spike at 33. In fact it is the single largest value guessed. Second there are other spikes at 50, 22, 1, and 0. There is also a small one at 66. There are good explanations for all of this.

No matter your answer, unless you picked 18–22, you’ve shown a flaw in your thinking, though they could be very different flaws.

Numbers above 66 — Even if you assumed every person guessed 100, that would still make 2/3 of their guess to be 66.67. It cannot possibly go higher than that per the rules of the game. Yet you can see some people chose that. That may indicate a complete failure of understanding or perhaps some people didn’t take the contest seriously. This doesn’t invalidate the results because this experiment has been done numerous times. Different experiments have offered cash prizes, charged a fee for entering, and so on. Such barriers discourage people from actively making joke guesses. In all those cases the end result is still around 18–22.

66 — Two thirds of 100 is 66.67, or 66 if you round down. Anyone who guessed this is showing a huge failure in understanding the game. For 66 to be correct the average of every other person’s answer would have to be 100. That is highly unlikely to say the least.

50 — People who chose this took 0 steps of thinking. The average of randomly chosen numbers between 0 and 100 is 50. But they failed to incorporate the 2/3 rule into their answer.

33 — They did the first step of thinking required. They assumed, logically, the value of randomly chosen numbers would be about 50 and 2/3 of 50 is 33. Clearly this is where the plurality of people stopped their analysis with almost 10% picking this number. The next closest was 1 with barely a smidgen over 6%. Your flaw is not realizing other people would also understand to take 2/3 of 50 and put down 33. You can’t put down what everyone else put down, you have to put down 2/3 of what you believe everyone else will answer.

22 — Some people went a step further. They knew if people chose 33, the right answer should be 22 so they picked 22.

1/0 — This is the “hyperrational” answer. In the case that you assume other people are also hyperrational you should pick 1 or 0 depending on your interpretation of the rules based on the logic detailed in steps 1–5 above. There is a constant pressure to take 2/3 of whatever number you arrive at. And when you get the new number you take 2/3 of that again over and over and over until you can go no further. However if you picked 1/0, you assumed too much. You have to realize that people are not that rational or logical. Your answer assumed hyperrationality on the part of everyone else who answered the question or at least the majority of people answering the question. Clearly we were wrong. (I must, to my great shame, admit that I chose 0 as my answer.) The point of the contest was not to find what should be the answer, it was to find the actual answer. People don’t always choose the rational answer and you have to understand that. In fact this experiment demonstrates that most people absolutely do not pick the rational answer. Not taking that into account means you’re not taking reality into account.

Any other number — Chances are with any other number besides 0, 1, 18, 19, 20, 21, 22, 33, 50, 66 you were picking at random. If you guessed completely at random without taking into consideration the 2/3 rule or making any sort of attempt at guessing what other players might pick then you either failed to understand the rules or you didn’t care to follow them or you just had no idea what to do. Either way it shows tremendously flawed thinking.

18–22 — The few people who picked between 18–22 were correct with 19 being the most correct. As you can see it’s slightly lower than 22 which would have been 2/3 of 33, the first step. This is due to all the people picking 1 and 0. The well informed, logical person would have understood that most people do not go all the way to 0 as logic would dictate rational people should do. They would know most people go only 1 step. And they would know some people would go all the way, making the number less than 22 but perhaps by only a little bit.

Less than 12.5% of all 60,000+ contestants answered within the 18–22 range. Even if you add the hyperrational people it’s less than 25% of people who went further than the first step of thinking.

Why this matters:

In life we often assume everyone else knows what they’re doing and is doing it for a rational reason. This is dangerous. Think about politics for a second. Assuming everyone has a rational reason for what they do leads to some dark places. Lots of people’s personal politics just aren’t that well thought out. They may be causing you harm with what they believe and how they vote but chances are they aren’t doing that intentionally. They just haven’t thought their politics through all the way. If you go around assuming everyone knows the consequences of their politics you end up having to assume when those politics go wrong that those people knew it would go wrong and wanted it anyway. “Never attribute to malice that which can be sufficiently explained by ignorance.” It may not seem like a big difference but it is. Your response is different to those you believe are trying to harm you and those you believe just don’t understand the effects of their actions.

Only 12.5% of the contestants got the answer correct. That means the vast majority of people, likely including yourself (and me), weren’t that good at understanding the intentions or thoughts of others. Going back to politics, people have a strong tendency to see themselves as having figured it all out while everyone else is being stubborn or idiotic. 87.5% of people got this wrong. The chances are high you are among that group. Assuming you know and other people don’t is a vanity most of us are incorrect about. Combined with the first point it leads to a pretty dysfunctional political atmosphere where we assume the absolute worst about everyone but never open our minds to the possibility of being wrong ourselves. Think about how much progress could be made in an environment like that. Does it seem likely?

2. Bayesian Probability — Explanation

Consider the following situation:

- 1% of people develop cancer (and therefore 99% do not).

- 80% of cancer screening tests detect cancer when it is there (and therefore 20% miss it).

- 10% of cancer screening tests detect cancer when it’s not there (and therefore 90% correctly return a negative result).

If you get a positive result back from a test, what are the odds that you actually have cancer?

— —

— —

The correct answer is 7.48%. You have a mere 7.48% chance of having cancer given those conditions. If you’re like most people you probably guessed somewhere between 80% and 70%. This is different from the first puzzle. It’s not about levels of thinking, not really. Most people just subtract the false positive rate from the detection rate and call it a day. That’s not the zero step, first step, or second step. It’s not a step at all. Doing it that way is completely wrong from the start. You need to calculate the Bayesian probability.
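For reference, the formula in question is Bayes’ theorem. Written out for this puzzle it looks like the following, where $C$ for “has cancer” and $+$ for “tests positive” are shorthand I am introducing here, not part of the puzzle itself:

$$
P(C \mid +) = \frac{P(+ \mid C)\,P(C)}{P(+ \mid C)\,P(C) + P(+ \mid \neg C)\,P(\neg C)} = \frac{0.80 \times 0.01}{0.80 \times 0.01 + 0.10 \times 0.99} \approx 0.0748
$$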

Chances are you don’t understand why that formula is what it is. That’s okay because the website betterexplained.com does a good job of explaining the reasoning. (Imagine that) I’ll try to condense it below in my own way.

Without doing the math, you can sort of get an understanding for why the number is so low by thinking about it like this. You don’t know whether you have cancer or not before the test and you still can’t be sure after the test. Before the test there was only a 1% chance that you had cancer and if you were that 1% who did, the test would have an 80% chance of detecting it.

But the odds were significantly higher, 99%, that you wouldn’t have cancer. And even though the false positive rate is only 10%, that means out of that 99% of people who don’t have cancer (which still includes you) 10% of them will get a false positive reading back. Which is the bigger possibility? That you are of the 99% with a 10% chance of a false positive? Out of every 100 people tested, that group is 9.9 people. Or that you are the 1% with an 80% chance of a true positive? That’s only 0.8 people. You are far more likely to be among the false positive group.

The math can be helpful though so let’s math it out.

First, the way to find the probability that you actually have cancer given a positive result is to find the total chance of a positive result and compare the true positives against it. That means you have to look at not just the true positive rate, but also the false positive rate. You need both in order to know what your chances are of getting a positive result, whether true or false.

Remember, you’re not looking for the true positive rate for when you have cancer.

You don’t know whether you have cancer or not. That is the point of having a cancer test, to find out. If you only take the true positive rate for the 1 out of the 100 people who have cancer, you are inserting knowledge that you don’t have, that the 1 person you are testing has cancer.

- Out of those 100 people, how many times are you going to get a true positive result? You can find that by multiplying the 1% actual cancer rate and the 80% true positive rate which leaves you with 0.8%. That’s the first part.

- The second part is to find the false positive rate. So let’s do the same thing we did directly above. Multiply the 99% no cancer rate with the false positive rate of 10%. This leaves you with 9.9%.

- Add the true positive rate and the false positive rate for your total positive rate which comes to 10.7%.

- What percent of the time out of that 10.7% will you actually have a true positive? Well you divide the true positive rate of 0.8% by the total positive rate of 10.7% and get 7.48%.
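If you would rather let a computer do the arithmetic, the four steps above can be sketched in a few lines. The function and variable names are my own invention for illustration. The inputs are the three numbers from the puzzle.

```python
def probability_of_cancer_given_positive(base_rate, true_positive_rate, false_positive_rate):
    """Share of all positive results that come from people who really have cancer."""
    true_positives = base_rate * true_positive_rate           # 1% * 80% = 0.8%
    false_positives = (1 - base_rate) * false_positive_rate   # 99% * 10% = 9.9%
    total_positives = true_positives + false_positives        # 10.7%
    return true_positives / total_positives


result = probability_of_cancer_given_positive(0.01, 0.80, 0.10)
print(f"{result:.2%}")  # 7.48%
```

Try changing the base rate from 1% to 10% or 50% and watch how quickly the answer climbs. The test did not get any better, only the population being tested changed.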

7.48% is a far cry from the 70%-80% that most people guess. The key here is the low base rate of cancer occurrence and the false positive rate. The low base rate means that despite a seemingly low false positive rate, you are bound to get more false positives than true positives. For every 100 people, 99 will not have cancer and 10% of them will have a false positive. For every 100 people, 1 has cancer and 80% of them will have a true positive.

If you look at just the low false positive rate and the high true positive rate without adjusting for low base rates, you will vastly overestimate the reliability of the test.

This is a huge problem. Our flawed intuition suggests to us that at the very least, the test should be more likely to be right than not. Yet the complete opposite is true. It is not the difference between being 90% accurate and 65% accurate, which while huge, still shows the test to be somewhat useful. It is the difference between being right most of the time and being wrong more than 90% of the time. Quite a swing.

Why this matters:

People are terrible at statistics more complicated than a coin flip. Actually, even with coin flips we have trouble. Lots of people fall prey to the gambler’s fallacy. “I got 5 heads in a row, the next one must be tails!” The chance that the next one is tails is still 50%. Just watch someone at a casino who thinks their bad luck streak has to come to an end because they’ve lost so much already. This kind of illogical thinking has real consequences.
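You don’t have to take the coin flip claim on faith. Below is a rough simulation sketch, assuming a fair coin and Python’s built-in random number generator. The function name, the number of flips, and the seed are arbitrary choices of mine. It looks at every flip that comes right after five or more heads in a row and counts how often that flip is tails.

```python
import random


def tails_after_streak(flips=200_000, streak=5, seed=1):
    """Fraction of flips that land tails immediately after `streak` or more heads."""
    rng = random.Random(seed)
    run = 0        # current run of consecutive heads
    followed = 0   # flips that came right after a long enough run of heads
    tails = 0      # how many of those flips were tails
    for _ in range(flips):
        heads = rng.random() < 0.5
        if run >= streak:
            followed += 1
            tails += not heads
        run = run + 1 if heads else 0
    return tails / followed


print(f"tails after 5 heads in a row: {tails_after_streak():.1%}")
```

The result should hover around 50%, because the coin has no memory of the streak.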

We also see our faulty statistical intuition lead not just to personal loss but to legal injustice. A law professor at the University of Michigan has used Bayesian probability to show that we have almost certainly been sending innocent parents to prison over Shaken Infant Death Syndrome. (page 289) Our lack of understanding of low base rates and false positive rates has resulted in innocent people going to prison, wrongly found guilty of murdering their own child. Imagine the pain of losing one’s child, being accused of murdering that child, being found guilty for their murder, and then having to endure prison. Imagine the other parent who remains outside of prison, wondering whether their spouse really did commit the murder. Imagine that parent’s pain, imagine their unnecessary guilt at how they might have foreseen their spouse’s violence. Now both parents are separated and alone, losing a newborn child and their life partner in one fell swoop. Our terrible statistical intuition has dire consequences.

I said before that even the most intelligent of us are not immune. Well, trials are done by highly educated lawyers and judges. Lawyers on both sides call expert witnesses like doctors to testify about what they think happened in such cases. None of those people understood what was actually going on. Their misunderstandings led to the anguish described above, compounding an already tragic situation.

We can also take a look at stop and frisk policies in various cities and municipalities across the United States. Police stop people, supposedly under the suspicion of having drugs or firearms, and search them. Police are wrong more often than not about the suspect having drugs or firearms.

An analysis by the NYCLU revealed that innocent New Yorkers have been subjected to police stops and street interrogations more than 5 million times since 2002, and that black and Latino communities continue to be the overwhelming target of these tactics. Nearly nine out of 10 stopped-and-frisked New Yorkers have been completely innocent, according to the NYPD’s own reports.

Is there any justification for trampling on citizens’ Fourth Amendment protection against unreasonable searches and seizures when the police’s own statistics show they are far, far, far more likely to be wrong than right, by a ratio of 9:1? The vast majority of the people stopped are completely and utterly innocent of any wrongdoing. They don’t even get a ticket or a citation.

You could even apply this to our search for terrorists. The terrorist base rate is incredibly low. Let’s look at just Islamic terrorism in the United States. There are over 3,300,000 Muslims in the United States at present. In October of 2015, FBI Director James Comey said that “federal authorities have an estimated 900 active investigations pending against suspected Islamic State-inspired operatives and other home-grown violent extremists across the country.” Divide 900 suspected operatives by 3,300,000 total Muslims and you get a base rate of .0273% of Muslims in the United States being terrorists. And that’s if you assume all the suspected operatives being tracked are actually terrorists. Let’s even assume that the federal government is underestimating and undertracking potential Muslim terrorists by a factor of 10. That’s still only .273% of the Muslim population as a base rate for terrorists. That’s not even 1% of Muslims. It’s not even half a percent of Muslims. It’s slightly over a quarter of a percent. And that was if we assumed the number was 10 times higher than the suspected targets. Not even proven targets.

Which isn’t to say we shouldn’t be tracking people we have good reason to be suspicious about. But when you have people like Donald Trump saying we need to stop all Muslim immigration or Ted Cruz saying we need to patrol Muslim neighborhoods based on less than half a percent of Muslims being potential terrorists? Doesn’t that seem like we might be selling our civil liberties out too quickly?

Shall we try the math?

- Let’s go with an even 1% of Muslims being terrorists, despite the fact that as we already know, it is entirely too high.

- Let’s assume our terrorist identifying algorithm has a 99% true positive rate.

- Let’s assume it has only a 5% false positive rate.

- 1% (Muslim terrorist base rate) * 99% (true positive rate) = .99%

- 99% (Muslim non-terrorist rate) * 5% (false positive rate) = 4.95%

- .99% (true positive while terrorist rate) / 5.94% (total positive rate) = 16.67%.

- 16.67% of the time our 99% “accurate” algorithm identifies a target, that person is actually a terrorist.

Remember, that is using very, very, favorable numbers. An incredibly high true positive rate, an incredibly low false positive rate, and a massively inflated base rate. The reality is it is likely to be significantly less accurate than 16.67%.
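To see how much the inflated base rate flatters the algorithm, here is a small sketch that reruns the same arithmetic with the lower base rates discussed earlier (900 investigations out of 3,300,000 people, and ten times that). The 99% and 5% rates are the assumed figures from the list above, and the function and label names are hypothetical ones I made up for the example.

```python
def chance_flag_is_correct(base_rate, true_positive_rate=0.99, false_positive_rate=0.05):
    """Probability that a flagged person is a real target, given the base rate."""
    true_pos = base_rate * true_positive_rate
    false_pos = (1 - base_rate) * false_positive_rate
    return true_pos / (true_pos + false_pos)


base_rates = {
    "inflated 1% assumption": 0.01,
    "10x the open investigations": 9_000 / 3_300_000,
    "open investigations only": 900 / 3_300_000,
}

for label, rate in base_rates.items():
    print(f"{label}: {chance_flag_is_correct(rate):.2%}")
```

With the base rate taken straight from the investigation count, fewer than 1 in 100 flagged people would be a real target, even with this generous imaginary algorithm.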

Is that encouraging news? Does profiling seem justified in that case? Do Donald Trump and Ted Cruz seem reasonable given such data?

This kind of analysis can be applied to basically all stereotypes. We take for granted that they are true but it is highly possible that those stereotypes are wrong more often than they are right. When was the last time you did the math on stereotypes?

3. The Monty Hall Problem — Solution

You’re on a game show. There is a host and three doors. Behind two doors is nothing and behind the third door is a prize which is whatever your heart desires. You pick a door, any door. The host now opens one of the two remaining doors revealing nothing behind it. This is always the case. The host will never pick a door with the prize behind it. There are now two doors left unopened, one being the door you chose at the start and the other being the door the host chose not to open. You now have the opportunity to swap doors to the other unopened door. Do you swap or do you stay?

— —

— —

You should swap.

For those of you who said stay, does getting a second chance to think about it make you realize the reason for your error? For those of you who said swap, can you explain why or was it just a gut feeling? You should know that most people elect to stay. If you are like most people you felt that once it came down to two doors your odds were 50:50 and it didn’t matter whether you swapped or stayed.

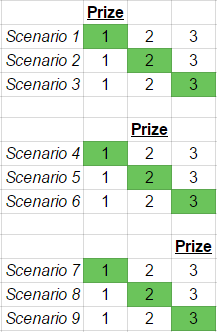

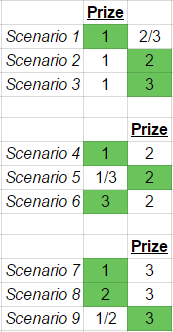

Study the following chart. The green squares represent your picked doors. Your three choices and the possibility of the prize being behind any of the 3 doors means nine total possible scenarios. If you think that this means at this point you have a 1 in 3 chance of correctly guessing the prize, you are correct. Notice that the green square representing your pick coincides with the prize door 3 times out of 9 total scenarios.

Now start eliminating doors as the host. This second chart shows the doors the host can open. The reds show which doors he must open and the yellows represent where the host must choose between two doors. He must open exactly one of the two yellows. And as a reminder, the host may not open the door with the prize behind it.

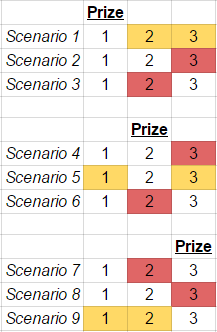

Now redraw the scenarios without the doors that the host has eliminated. All the red doors must go. One of the two yellow doors must go. At this point you’re left with a choice to swap or stay. You look at the doors and if you’re like most people you see two doors, one with the prize and one without and think your odds are 50:50. Most people elect to stay because it’s their first choice and they think it doesn’t matter if they swap or stay. If you thought that you are wrong, like most people.

The chart above is the redrawn scenarios with the doors eliminated. Try counting the number of times you picked the right door, colored as green. In 6 of 9 scenarios, you picked the wrong door. This should be expected, right? After all your initial decision was made when you had a 1 in 3 chance of guessing correctly. It’s no surprise therefore that you would have been wrong 2/3 of the time.

But that means your chances aren’t 50:50! There are nine scenarios. In scenarios one, five, and nine, you would lose by swapping because you had already chosen the correct door. In the remaining six scenarios, swapping gets you the prize. Let that sink in. That would mean that 3 out of 9 times swapping loses you the prize but that 6 out of 9 times swapping gets you the prize. The odds aren’t 50:50, they’re 2:1 in favor of swapping.
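The nine scenarios are few enough to count by brute force. This is a minimal sketch, with variable names of my own choosing, that walks through every combination of your pick and the prize door and tallies which strategy wins.

```python
from itertools import product

doors = [1, 2, 3]
stay_wins = 0
swap_wins = 0

for pick, prize in product(doors, repeat=2):
    if pick == prize:
        # You already hold the prize, so staying wins and swapping loses.
        stay_wins += 1
    else:
        # The host must open the only other empty door,
        # so the door you can swap to is the prize door.
        swap_wins += 1

print(stay_wins, swap_wins)  # 3 6
```

Three wins for staying against six for swapping, out of the same nine equally likely scenarios.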

The flaw here is that you failed to take into account what the host knew. In game theory, accurately knowing or estimating how and why other players in the game will act is a key element for informing your own actions. This goes right back to the first puzzle involving the guessing of 2/3 of the average.

Think about how you would win that first puzzle. If you knew exactly how everyone else was going to guess it would be impossible to lose. You can’t know exactly so the object is to see if you can reason your way to an estimate of what you think they will do. Either way the point is to try and understand the mind of the other players. You want to figure out what they know, what they think you know about what they know and on and on down that rabbit hole.

The Monty Hall problem only has one level. The host knows which door has the prize and which doors do not. He must know that because he always picks a door without the prize. If you realize that, you are getting to know the mind of the only other player in the game. When you incorporate that knowledge into your decision making and swap, your chance of winning is 66.67%, not the 50% your intuition suggests, and double the 33.33% you get by staying.

Try this with friends and family. Run them through the game 100 times or use the internet version I linked above and here and test it yourself. Choose to swap every time and see how often out of 100 times it results in a prize. Or choose to stay every time and see how often you don’t get a prize. I admit that I didn’t understand this until it was explained to me. Afterwards it was obvious. But it certainly isn’t intuitive for most people.
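If you don’t have a patient friend handy, a simulation works too. The sketch below is one way to set it up, assuming the host picks at random when he has two empty doors to choose from. The function names, the 10,000 rounds, and the seed are all arbitrary choices of mine.

```python
import random


def play_round(swap, rng):
    """Play one game and return True if the contestant ends up with the prize."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens an empty door that is neither your pick nor the prize.
    opened = rng.choice([d for d in doors if d != pick and d != prize])
    if swap:
        # Move to the one door that is neither your first pick nor the opened door.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == prize


def win_rate(swap, rounds=10_000, seed=0):
    rng = random.Random(seed)
    wins = sum(play_round(swap, rng) for _ in range(rounds))
    return wins / rounds


print(f"always swap: {win_rate(True):.3f}")
print(f"always stay: {win_rate(False):.3f}")
```

Swapping should win roughly two games out of three and staying roughly one out of three, give or take a little noise from run to run.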

That’s right, for those of you who guessed wrong, I did tell you that you wouldn’t be alone, didn’t I? Only 13% chose to switch so we’re right there with most of humanity. Pretty incredible. It gets even better.

I would never lie to you and I surely wasn’t lying when I said even the smartest among us are susceptible to flawed thinking. Not even Nobel physics laureates and PhD’s are immune. Just look at some of the mail received by Vos Savant. People with doctorates from various universities all across the nation wrote in, adamant that Vos Savant was wrong in her math. Here are just a few:

You blew it, and you blew it big! Since you seem to have difficulty grasping the basic principle at work here, I’ll explain. After the host reveals a goat, you now have a one-in-two chance of being correct. Whether you change your selection or not, the odds are the same. There is enough mathematical illiteracy in this country, and we don’t need the world’s highest IQ propagating more. Shame!

I have been a faithful reader of your column, and I have not, until now, had any reason to doubt you. However, in this matter (for which I do have expertise), your answer is clearly at odds with the truth.

I am sure you will receive many letters on this topic from high school and college students. Perhaps you should keep a few addresses for help with future columns.

You made a mistake, but look at the positive side. If all those Ph.D.’s were wrong, the country would be in some very serious trouble.

All four of those people who wrote in had PhD’s and that’s not even all of them. Notice how much conviction they have that she is wrong to say you should swap doors. See how rude some of them can be even though they are undeniably and provably wrong. We can prove them wrong not just with math, not just by drawing out the scenarios, but also just by actually doing the game over and over. If you guessed wrong you have some very intelligent and well-educated, albeit rude, company.

Why this matters:

As with the first puzzle, we can see that we often fail to accurately account for what other people know and how they think. We were told enough information that we should have realized the host knew where the prize was. We should have taken that into account. We either didn’t do that or didn’t even know how to do that. Either way, it’s not a flattering result for humans. Being able to accurately gauge other people’s intentions and knowledge is a huge part of determining our own optimal course of action but these puzzles show how exceedingly bad we are at that exercise.

It also yet again shows that most humans are just awful at statistics and probability. This is a much simpler statistical problem than Bayesian probability yet it eludes just as many people, even highly educated and intelligent people. Even people trained and educated in physics which requires a great deal of math. I have absolutely no doubt that if you sat those people down and went through the math with them they would realize their error.

But they didn’t sit down and do the math. They relied on their gut and intuition. How often do we rely on stereotypes without ever checking whether they’re reliable or not? How often do we use a gut feeling to judge a person or idea instead of doing the more difficult task of a proper, objective evaluation? Our intuition is terrible. We rely on it to our detriment and to the detriment of others. We must recognize this fact and address it in our private and public lives.

Common Wisdom is Often Not Wisdom at All

My intention here isn’t to call everyone dumb. It is to make you realize that all of us, including myself, cannot just assume we know what we’re doing. We cannot assume that just because we are at the top of the intelligence ladder on our lone, solitary planet that we are actually rational or logical most of the time. We must recognize that all of us are given to enormous cognitive biases, even on relatively simple ideas which after an explanation seem obvious.

That is why science is so important. Science is the discipline where everything is rigorously tested to see if it holds up to scrutiny. We take things we suspect may be true or false and we test them over and over to see if they actually are. We not only test to see if it works, we test to see if there are times when it doesn’t work. Sounds a little bit like Bayesian probability, right? Even the most basic ideas that we take for granted must be subject to such experimentation. For how long did we just assume that time moved at the same speed for everyone? We now know that it does not and we use that knowledge nearly every day when we use our global positioning systems. I personally would be figuratively and literally lost without it. Thank you for relativity, Albert Einstein.

This isn’t just academic or theoretical. We waste a lot of time, money, and effort introducing inefficiencies because of these biases. Think of all the innocent people held up by police who didn’t get so much as a ticket. Millions of stops over a decade. How much money did we waste having police do that when nearly 90% of people were innocent of everything? Could we have put those police to better use elsewhere? Surely there were actual crimes to solve? We ruin a lot of lives. Think of the wrongly convicted innocent parents and their loved ones who will never really know if their child’s death was an accident or murder.

You must do the math.

You must run the tests.

You must verify that your gut feelings are actually supported by the facts.

If we don’t use our ability to do those things, we might as well be just the smartest ape in the zoo.